How I Exposed Microservices on AWS EKS: Two Ways That Actually Work

A few months back, I deployed a bunch of microservices to EKS. Everything worked perfectly inside the cluster. Then someone asked: “How do users actually access this?”

Good question.

My services were running happily in pods, completely isolated from the internet. I needed a way to get traffic in, handle SSL, and route requests to the right service.

I’ve done this two ways in production. Let me show you both.

The Core Problem

Your pods are inside a cluster. Users are on the internet. You need something in between that:

Accepts HTTPS traffic from users

Terminates SSL (decrypts the traffic)

Routes requests to the right service

Scales automatically

This is where AWS load balancers and Kubernetes Ingress come together.

Think of it like this: Ingress is the rulebook that says “send /users to user-service, send /orders to order-service.” The load balancer is the bouncer at the door who reads that rulebook and enforces it.
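For the rulebook to work, every name in it has to point at a Kubernetes Service. Here's a minimal sketch of what user-service might look like. The pod label, Service port 80, and container port 8080 are my assumptions for illustration; yours will probably differ.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: user-service
spec:
  type: ClusterIP          # internal only; the Ingress is what exposes it
  selector:
    app: user-service      # assumed pod label
  ports:
    - name: http
      port: 80             # assumed Service port, referenced by the Ingress
      targetPort: 8080     # assumed container port
```

The Ingress only ever names the Service and its port. It never needs to know which pods sit behind it.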

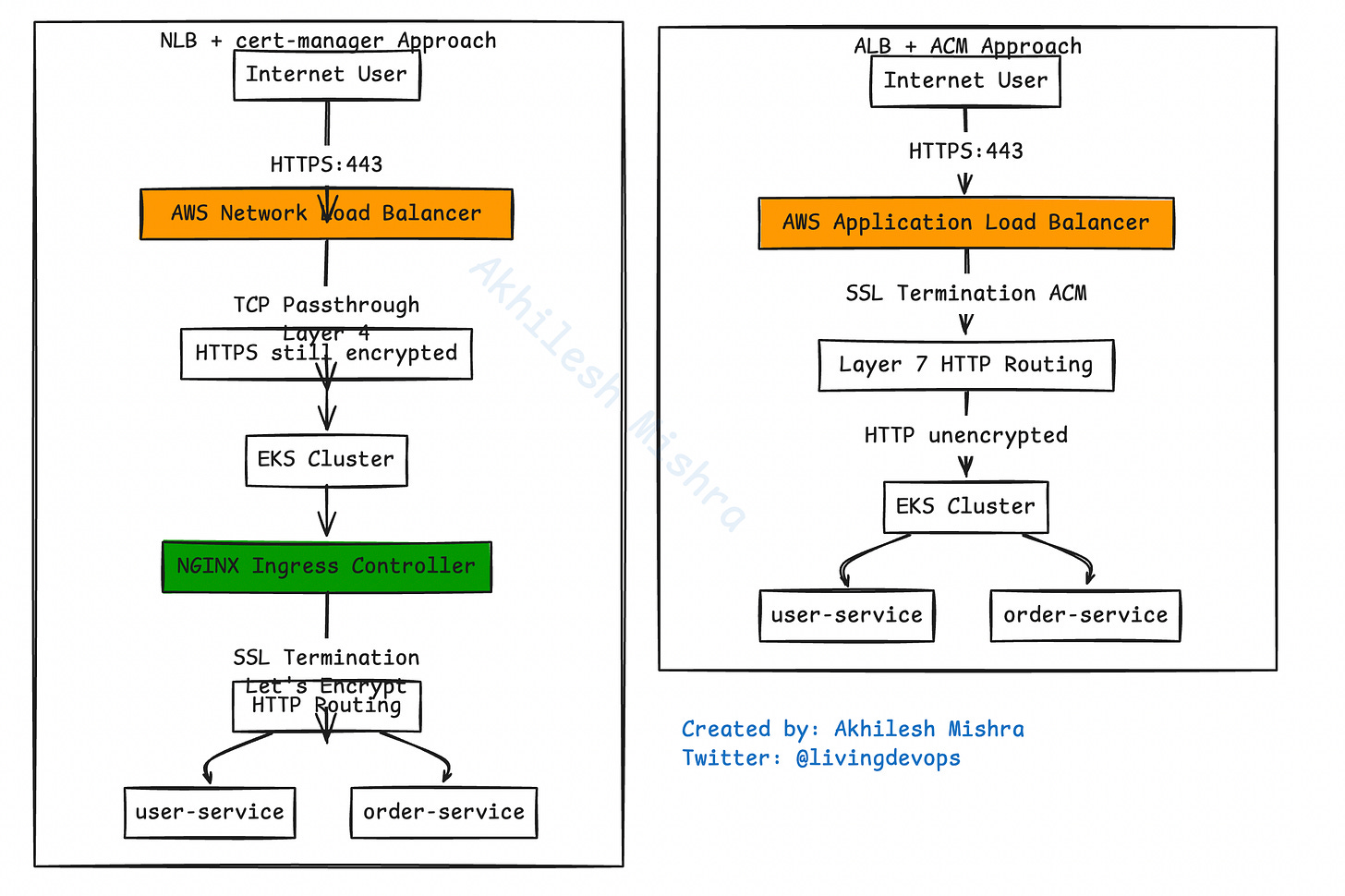

Approach 1: Let AWS Handle Everything (ALB + ACM)

This is the AWS-native way. Simple and clean.

Here’s how traffic flows:

    User (HTTPS)
      ↓
    AWS Application Load Balancer
    (Decrypts SSL, reads the path)
      ↓
    Routes to correct pod based on path
      ↓
    Your microservice pod

The magic happens through something called the AWS Load Balancer Controller. It’s a pod running in your cluster that watches for Ingress resources and automatically creates ALBs for you.

Here’s the architecture:

    Internet → ALB (SSL termination) → Your Pods (HTTP)

The ALB sits outside your cluster, managed by AWS. You just tell Kubernetes what you want, and the controller makes it happen.

How it actually works:

You create an Ingress resource in Kubernetes with some special annotations. The controller sees it, calls AWS APIs, and creates an Application Load Balancer with all the right settings.
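How does the controller know which Ingress resources are its job? Through an IngressClass. The controller's Helm chart typically creates one for you, so treat this as a sketch of what exists in the cluster rather than something you have to write by hand.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: alb                          # the name an Ingress refers to via ingressClassName
spec:
  controller: ingress.k8s.aws/alb    # identifies the AWS Load Balancer Controller
```

Any Ingress that sets `ingressClassName: alb` gets picked up by the controller. Anything else is ignored.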

For SSL, you use AWS Certificate Manager (ACM). Request a certificate for your domain, validate it through DNS, and you’re done. ACM handles renewal automatically as long as the DNS validation record stays in place, so you rarely have to think about it again.
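If you'd rather keep the certificate request in code instead of clicking through the console, a CloudFormation template is one way to do it. This is a sketch, assuming your domain lives in a Route 53 hosted zone. The logical name `ApiCertificate` and the hosted zone ID are placeholders I made up.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Resources:
  ApiCertificate:                          # hypothetical logical name
    Type: AWS::CertificateManager::Certificate
    Properties:
      DomainName: api.yourdomain.com
      ValidationMethod: DNS
      DomainValidationOptions:
        - DomainName: api.yourdomain.com
          HostedZoneId: Z0000000EXAMPLE    # placeholder Route 53 zone ID
Outputs:
  CertificateArn:
    Value: !Ref ApiCertificate             # the ARN for the certificate-arn annotation
```

The ARN in the output is the value that goes into the certificate-arn annotation on the Ingress.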

The Ingress looks something like this (simplified):

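    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-api
      annotations:
        # Without this, the controller creates an internal ALB
        alb.ingress.kubernetes.io/scheme: internet-facing
        # Send traffic straight to pod IPs (works with ClusterIP Services)
        alb.ingress.kubernetes.io/target-type: ip
        alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:...
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    spec:
      ingressClassName: alb
      rules:
        - host: api.yourdomain.com
          http:
            paths:
              - path: /users
                pathType: Prefix      # required in networking.k8s.io/v1
                backend:
                  service:
                    name: user-service
                    port:
                      number: 80      # assumed Service port
              - path: /orders
                pathType: Prefix
                backend:
                  service:
                    name: order-service
                    port:
                      number: 80      # assumed Service port

You apply this, wait about two minutes, and you have a fully functioning ALB with SSL.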

What I like about this:

AWS manages the hard parts. Certificate renewal? Automatic. Load balancer scaling? Automatic. Health checks? Configured for you with sensible defaults.

The ALB understands HTTP, so it can route based on paths, hostnames, headers — anything at the application layer.
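The default health check doesn't always match how your service reports health, so it's worth knowing you can tune it with annotations on the same Ingress. A sketch, assuming your service exposes a `/healthz` endpoint (that path and the numbers are my placeholders):

```yaml
metadata:
  annotations:
    # Path the ALB probes on each target; /healthz is an assumed endpoint
    alb.ingress.kubernetes.io/healthcheck-path: /healthz
    # Annotation values must be strings, hence the quotes
    alb.ingress.kubernetes.io/healthcheck-interval-seconds: "15"
    alb.ingress.kubernetes.io/healthy-threshold-count: "2"
```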

The catch:

You’re locked into AWS. If you ever move to another cloud, you’re rewriting this part.

Also, ALB only does HTTP/HTTPS. If you need raw TCP or UDP, you’re out of luck.

Approach 2: Control Everything Yourself (NLB + cert-manager)

This approach brings certificate management into Kubernetes. More control, more responsibility.

Here’s how traffic flows:

    User (HTTPS)
      ↓
    AWS Network Load Balancer
    (Just forwards TCP packets, doesn’t decrypt)
      ↓
    NGINX Ingress Controller inside cluster
    (Decrypts SSL, reads the path)
      ↓
    Routes to correct pod
      ↓
    Your microservice pod

The key difference: the NLB doesn’t understand HTTP. It’s a Layer 4 load balancer that just forwards TCP packets. SSL termination happens inside your cluster at the NGINX Ingress Controller.
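The NLB itself comes from a Service of type LoadBalancer sitting in front of NGINX. If you install ingress-nginx with its Helm chart or AWS manifest, this Service is normally created for you, so read this as a sketch of the moving part rather than something to copy. The names and labels follow the ingress-nginx defaults as I remember them, so check them against your install.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
  annotations:
    # Ask AWS for a Network Load Balancer instead of the default classic ELB
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: ingress-nginx        # assumed controller pod labels
    app.kubernetes.io/component: controller
  ports:
    - name: http
      port: 80
      targetPort: http      # named container port on the NGINX pod
    - name: https
      port: 443
      targetPort: https     # TLS arrives here still encrypted
```

Notice there is no certificate anywhere in this Service. The NLB passes port 443 through untouched and NGINX does the decrypting.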

Here’s the architecture:

    Internet → NLB (TCP passthrough) → NGINX (SSL termination) → Your Pods (HTTP)

For certificates, you use cert-manager. It’s a Kubernetes controller that talks to Let’s Encrypt and automatically gets you free SSL certificates.

How certificate automation works:

You create an Ingress with a cert-manager annotation. cert-manager sees it and thinks: “Oh, they need a certificate for api.yourdomain.com.”

It then talks to Let’s Encrypt:

cert-manager: “I need a certificate for api.yourdomain.com”

Let’s Encrypt: “Prove you own that domain. Serve this specific file at this URL.”

cert-manager creates a temporary pod to serve that file

Let’s Encrypt checks the URL, sees the file

Let’s Encrypt: “Cool, here’s your certificate”

cert-manager stores the certificate in a Kubernetes Secret

NGINX reads that Secret and uses it for SSL

This whole dance happens automatically. You just create the Ingress and wait a minute.
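The one piece you do have to set up once is the issuer that the annotation points at. Here's a sketch of a ClusterIssuer named letsencrypt-prod that uses the HTTP file challenge described above. The email address and the account key Secret name are placeholders of mine.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory   # Let's Encrypt production endpoint
    email: you@yourdomain.com                # placeholder; used for expiry notices
    privateKeySecretRef:
      name: letsencrypt-prod-account-key     # placeholder Secret for the ACME account key
    solvers:
      - http01:
          ingress:
            # Route the challenge through NGINX; older cert-manager
            # releases use `class: nginx` here instead
            ingressClassName: nginx
```

While you're still experimenting, pointing `server` at the Let's Encrypt staging endpoint is a good idea, since production has rate limits.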

The Ingress looks like this:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-api
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-prod
    spec:
      ingressClassName: nginx
      tls:
        - hosts:
            - api.yourdomain.com
          secretName: api-tls-cert
      rules:
        - host: api.yourdomain.com
          http:
            paths:
              - path: /users
                pathType: Prefix      # required in networking.k8s.io/v1
                backend:
                  service:
                    name: user-service
                    port:
                      number: 80      # assumed Service port
              - path: /orders
                pathType: Prefix
                backend:
                  service:
                    name: order-service
                    port:
                      number: 80      # assumed Service port

What I like about this:

You’re not locked into AWS. This exact setup works on GCP, Azure, or any Kubernetes cluster.

NLB is cheaper than ALB at high scale and has lower latency.

Traffic stays encrypted all the way into your cluster, until it reaches NGINX.

It handles more than web traffic: HTTP, HTTPS, WebSockets, gRPC, and raw TCP can all go through the same NLB.
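Raw TCP takes a little extra wiring, because an Ingress resource only describes HTTP. With ingress-nginx the usual route is a ConfigMap that maps a port to a Service. A sketch, assuming a hypothetical `postgres` Service in the `default` namespace:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  # "<external port>": "<namespace>/<service>:<service port>"
  "5432": "default/postgres:5432"    # hypothetical backend Service
```

For this to take effect, the controller has to be started with its `--tcp-services-configmap` flag pointing at this ConfigMap, and port 5432 has to be added to the LoadBalancer Service in front of NGINX.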

The catch:

More moving parts. You’re managing cert-manager, NGINX, and the certificate lifecycle.

If certificate renewal fails, you need to debug it. With ACM, AWS handles that.
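When you do end up debugging, it helps to know that the annotation on the Ingress is shorthand. Behind the scenes cert-manager creates a Certificate resource, and that is the object whose status and events tell you what went wrong. Written out explicitly, it would look roughly like this (the `renewBefore` value is my own choice, not a requirement):

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: api-tls-cert
spec:
  secretName: api-tls-cert          # the Secret NGINX reads
  dnsNames:
    - api.yourdomain.com
  issuerRef:
    name: letsencrypt-prod
    kind: ClusterIssuer
  renewBefore: 720h                 # optional; start renewing 30 days before expiry
```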

The Real Differences

Where SSL gets decrypted:

ALB approach: SSL stops at the load balancer outside your cluster. Traffic inside your VPC is unencrypted HTTP.

NLB approach: SSL comes all the way into your cluster to NGINX, and only the last hop from NGINX to your pods is plain HTTP. More secure, but you manage it.

Who manages certificates:

ALB approach: AWS Certificate Manager. Set it and forget it.

NLB approach: cert-manager and Let’s Encrypt. Automated, but you’re responsible if something breaks.

Cost:

Pretty similar for most workloads. NLB is slightly cheaper at very high scale.

Complexity:

ALB approach: Two components (ALB Controller, Ingress). Simple.

NLB approach: Three components (NGINX, cert-manager, Ingress). More complex, but not dramatically.

Which One Should You Use?

I’ve run both in production. Here’s my honest take:

Use ALB + ACM if you’re staying on AWS and want the simplest life. Let AWS handle the hard stuff. This is what most companies do.

Use NLB + cert-manager if you care about portability, need non-HTTP protocols, or want full control over SSL configuration.

Most teams start with ALB because it’s easier. Some eventually move to NLB as they scale and need more control.

Both work. Both are production-ready. Both handle massive traffic.

The important part isn’t which one you choose. It’s understanding how traffic flows from the internet to your pods, where SSL terminates, and who’s responsible for what.

Once you understand that, the implementation is just YAML and waiting a few minutes.